Drivers declare the supported audio signal processing modes for each device.

Available Signal Processing Modes

Audio categories (selected by applications) are mapped to audio modes (defined by drivers). Windows defines seven audio signal processing modes. OEMs and IHVs can determine which modes they want to implement. It is recommended that IHVs/OEMs utilize the new modes to add audio effects that optimize the audio signal to provide the best user experience. The modes are summarized in the table shown below.

| Mode | Render/Capture | Description |

|---|---|---|

| Raw | Both | Raw mode specifies that there should not be any signal processing applied to the stream. An application can request a raw stream that is completely untouched and perform its own signal processing. |

| Default | Both | This mode defines the default audio processing. |

| Movies* | Render | Movie audio playback |

| Media* | Both | Music audio playback (default for most media streams) |

| Speech* | Capture | Human voice capture (e.g. input to Cortana) |

| Communications* | Both | VOIP render and capture (e.g. Skype, Lync) |

| Notification* | Render | Ringtones, alarms, alerts, etc. |

* New in Windows 10.

Signal Processing Mode Driver Requirements

Audio device drivers need to support at least the Raw or Default mode. Supporting additional modes is optional.

Not all modes might be available on a particular system. Drivers define which signal processing modes they support (that is, which types of APOs are installed as part of the driver) and inform the OS accordingly. If a particular mode is not supported by the driver, Windows uses the next best matching mode.

The following diagram shows a system that supports multiple modes:

Windows Audio Stream Categories

To inform the system about the usage of an audio stream, applications have the option to tag the stream with a specific audio stream category. Applications can set the audio category, using any of the audio APIs, just after creating the audio stream. In Windows 10 there are ten audio stream categories.

| Category | Description |

|---|---|

| Movie | Movies, video with dialog (Replaces ForegroundOnlyMedia) |

| Media | Default category for media playback (Replaces BackgroundCapableMedia) |

| Game Chat | In-game communication between users (New category in Windows 10) |

| Speech | Speech input (e.g. personal assistant) and output (e.g. navigation apps) (New category in Windows 10) |

| Communications | VOIP, real-time chat |

| Alerts | Alarm, ring tone, notifications |

| Sound Effects | Beeps, dings, etc |

| Game Media | In game music |

| Game Effects | Balls bouncing, car engine sounds, bullets, etc. |

| Other | Uncategorized streams |

As mentioned previously, audio categories (selected by applications) are mapped to audio modes (defined by drivers). Applications can tag each of their streams with one of the ten audio categories.

Applications do not have the option to change the mapping between an audio category and a signal processing mode. Applications have no awareness of the concept of an 'audio processing mode'. They cannot find out what mode is used for each of their streams.
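The relationship between categories, modes, and the fallback to a "next best matching mode" can be pictured with a small, portable C++ model. This is only a sketch under stated assumptions: the category-to-mode preferences and the fallback order (Default, then Raw) are illustrative guesses, not the mapping Windows actually uses, and the names `Mode`, `Category`, `PreferredMode`, and `ResolveMode` are hypothetical.

```cpp
#include <optional>
#include <set>

// Signal processing modes listed in the modes table.
enum class Mode { Raw, Default, Movies, Media, Speech, Communications, Notification };

// Audio stream categories listed in the categories table.
enum class Category { Movie, Media, GameChat, Speech, Communications, Alerts,
                      SoundEffects, GameMedia, GameEffects, Other };

// ASSUMPTION: illustrative preference only. The real mapping is owned by
// Windows and cannot be changed or inspected by applications.
Mode PreferredMode(Category c) {
    switch (c) {
        case Category::Movie:          return Mode::Movies;
        case Category::Media:          return Mode::Media;
        case Category::Speech:         return Mode::Speech;
        case Category::GameChat:
        case Category::Communications: return Mode::Communications;
        case Category::Alerts:         return Mode::Notification;
        default:                       return Mode::Default;
    }
}

// Picks the preferred mode if the driver supports it.
// ASSUMPTION: "next best" is modeled as Default, then Raw.
std::optional<Mode> ResolveMode(Category c, const std::set<Mode>& supported) {
    const Mode candidates[] = { PreferredMode(c), Mode::Default, Mode::Raw };
    for (Mode m : candidates) {
        if (supported.count(m) != 0) {
            return m;
        }
    }
    // Only reachable if the driver supports neither Raw nor Default.
    return std::nullopt;
}
```

Because drivers need to support at least the Raw or Default mode, the empty result should only occur for a driver that does not meet that requirement.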

WASAPI Code Sample

The following WASAPI code from the WASAPIAudio sample shows how to set different audio categories.

Signal Processing Modes and Effects

OEMs define what effects will be used for each mode. Windows defines a list of seventeen types of audio effects.

For information on how to associate APOs with modes, see Implementing Audio Processing Objects.

Applications can ask what effects would be applied to a specific stream for either RAW or non-RAW processing. Applications can also ask to be notified when the effects or raw processing state changes. The application may use this information to determine if a specific streaming category like communication is available, or if only RAW mode is in use. If only RAW mode is available, the application can determine how much audio processing of its own to add.

If System.Devices.AudioDevice.RawProcessingSupported is true, applications also have the option to set a 'use RAW' flag on certain streams. If System.Devices.AudioDevice.RawProcessingSupported is false, applications cannot set the 'use RAW' flag.

Applications have no visibility into how many modes are present, with the exception of RAW/non-RAW.
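As a rough illustration of the decision described above, the following portable C++ sketch works out which effects an application would still have to apply itself once it knows what the system applies to a stream. The `StreamEffectsInfo` struct and `EffectsAppMustAdd` function are hypothetical stand-ins for whatever the application learns from the platform's effects query; they are not Windows APIs.

```cpp
#include <set>
#include <string>

// Hypothetical summary of what an application learned about one stream.
struct StreamEffectsInfo {
    bool rawOnly;                         // true when only RAW processing is in use
    std::set<std::string> activeEffects;  // effects the system applies, e.g. "AEC"
};

// Returns the subset of `wanted` effects that the system does not provide,
// which the application would need to implement on its own.
std::set<std::string> EffectsAppMustAdd(const StreamEffectsInfo& info,
                                        const std::set<std::string>& wanted) {
    if (info.rawOnly) {
        return wanted;  // nothing is applied by the system, so the app adds everything
    }
    std::set<std::string> missing;
    for (const std::string& effect : wanted) {
        if (info.activeEffects.count(effect) == 0) {
            missing.insert(effect);
        }
    }
    return missing;
}
```

An application that wants echo cancellation and noise suppression, for example, would add both when only RAW is in use, and only the missing one when the system already applies the other.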

Applications should request the optimal audio effect processing, regardless of the audio hardware configuration. For example, tagging a stream as Communications will let Windows know to pause background music.

For more information about the static audio stream categories, see AudioCategory enumeration and MediaElement.AudioCategory property.

CLSIDs for System Effects

FX_DISCOVER_EFFECTS_APO_CLSID

This is the CLSID for the MsApoFxProxy.dll “proxy effect”, which queries the driver to get the list of active effects.

KSATTRIBUTEID_AUDIOSIGNALPROCESSING_MODE

KSATTRIBUTEID_AUDIOSIGNALPROCESSING_MODE tells Kernel Streaming that the attribute being referenced is the signal processing mode attribute.

The #define statements shown here are available in the KSMedia.h header file.

KSATTRIBUTEID_AUDIOSIGNALPROCESSING_MODE is used by mode-aware drivers with a KSDATARANGE structure that contains a KSATTRIBUTE_LIST. This list has a single element, which is a KSATTRIBUTE. The Attribute member of the KSATTRIBUTE structure is set to KSATTRIBUTEID_AUDIOSIGNALPROCESSING_MODE.

Audio Effects

The following audio effects are available for use in Windows 10.

| Audio effect | Description |

|---|---|

| Acoustic Echo Cancellation (AEC) | Acoustic Echo Cancellation (AEC) improves audio quality by removing echo after it is already present in the audio stream. |

| Noise Suppression (NS) | Noise Suppression (NS) suppresses noise such as humming and buzzing, when it is present in the audio stream. |

| Automatic Gain Control (AGC) | Automatic Gain Control (AGC) is designed to provide a controlled signal amplitude at its output, despite variation of the amplitude in the input signal. The average or peak output signal level is used to dynamically adjust the input-to-output gain to a suitable value, enabling a stable level of output, even with a wide range of input signal levels. |

| Beam Forming (BF) | Beam Forming (BF) is a signal processing technique used for directional signal transmission or reception. This is achieved by combining elements in a phased array in such a way that signals at particular angles experience constructive interference while others experience destructive interference. The improvement compared with omnidirectional reception/transmission is known as the receive/transmit gain (or loss). |

| Constant Tone Removal | Constant tone removal is used to attenuate constant background noise such as tape hiss, electric fans or hums. |

| Equalizer | The Equalizer effect is used to alter the frequency response of an audio system using linear filters. This allows for different parts of the signal to be boosted, similar to a treble or bass setting. |

| Loudness Equalizer | The loudness equalizer effect reduces perceived volume differences by leveling the audio output so that louder and quieter sounds are closer to an average level of loudness. |

| Bass Boost | In systems such as laptops that have speakers with limited bass capability, it is sometimes possible to increase the perceived quality of the audio by boosting the bass response in the frequency range that is supported by the speaker. Bass boost improves sound on mobile devices with very small speakers by increasing gain in the mid-bass range. |

| Virtual Surround | Virtual surround uses simple digital methods to combine a multichannel signal into two channels. This is done in a way that allows the transformed signal to be restored to the original multichannel signal, using the Pro Logic decoders that are available in most modern audio receivers. Virtual surround is ideal for a system with a two-channel sound hardware and a receiver that has a surround sound enhancement mechanism. |

| Virtual Headphones | Virtualized surround sound allows users who are wearing headphones to distinguish sound from front to back as well as from side to side. This is done by transmitting spatial cues that help the brain localize the sounds and integrate them into a sound field. This has the effect of making the sound feel like it transcends the headphones, creating an 'outside-the-head' listening experience. This effect is achieved by using an advanced technology called Head Related Transfer Functions (HRTF). HRTF generates acoustic cues that are based on the shape of the human head. These cues not only help listeners locate the direction and source of sound but also enhance the sense of the acoustic environment that surrounds the listener. |

| Speaker Fill | Most music is produced with only two channels and is, therefore, not optimized for the multichannel audio equipment of the typical audio or video enthusiast. So having music emanate from only the front-left and front-right loudspeakers is a less-than-ideal audio experience. Speaker fill simulates a multichannel loudspeaker setup. It allows music that would otherwise be heard on only two speakers to be played on all of the loudspeakers in the room, enhancing the spatial sensation. |

| Room Correction | Room correction optimizes the listening experience for a particular location in the room, for example, the center cushion of your couch, by automatically calculating the optimal combination of delay, frequency response, and gain adjustments. The room correction feature better matches sound to the image on the video screen and is also useful in cases where desktop speakers are placed in nonstandard locations. Room correction processing is an improvement over similar features in high-end receivers because it better accounts for the way in which the human ear processes sound. Calibration is performed with the help of a microphone, and the procedure can be used with both stereo and multichannel systems. The user places the microphone where the user intends to sit and then activates a wizard that measures the room response. The wizard plays a set of specially designed tones from each loudspeaker in turn, and measures the distance, frequency response, and overall gain of each loudspeaker from the microphone's location. |

| Bass Management | There are two bass management modes: forward bass management and reverse bass management. Forward bass management filters out the low frequency content of the audio data stream. The forward bass management algorithm redirects the filtered output to the subwoofer or to the front-left and front-right loudspeaker channels, depending on the channels that can handle deep bass frequencies. This decision is based on the setting of the LRBig flag. To set the LRBig flag, the user uses the Sound applet in Control Panel to access the Bass Management Settings dialog box. The user selects a check box to indicate, for example, that the front-right and front-left speakers are full range, and this action sets the LRBig flag. To clear this flag, clear the check box. Reverse bass management distributes the signal from the subwoofer channel to the other output channels. The signal is directed either to all channels or to the front-left and front-right channels, depending on the setting of the LRBig flag. This process uses a substantial gain reduction when mixing the subwoofer signal into the other channels. The bass management mode that is used depends on the availability of a subwoofer and the bass-handling capability of the main speakers. In Windows, the user provides this information via the Sound applet in Control Panel. |

| Environmental Effects | Environmental effects work to increase the reality of audio playback by more accurately simulating real-world audio environments. There are a number of different environments that you can select, for example 'stadium' simulates the acoustics of a sports stadium. |

| Speaker Protection | The purpose of speaker protection is to suppress resonant frequencies that would cause the speakers to do physical harm to any of the PC's system components. For example, some physical hard drives can be damaged by playing a loud sound at just the right frequency. Secondarily, speaker protection works to minimize damage to speakers by attenuating the signal when it exceeds certain values. |

| Speaker Compensation | Some speakers are better at reproducing sound than others. For example, a particular speaker may attenuate sounds below 100 Hz. Sometimes audio drivers and firmware DSP solutions have knowledge about the specific performance characteristics of the speakers they are playing to, and they can add processing designed to compensate for the speaker limitations. For example, an endpoint effect (EFX) could be created that applies gain to frequencies below 100 Hz. This effect, when combined with the attenuation in the physical speaker, results in enhanced audio fidelity. |

| Dynamic Range Compression | Dynamic range compression amplifies quiet sounds by narrowing or 'compressing' an audio signal's dynamic range. Audio compression amplifies quiet sounds which are below a certain threshold while loud sounds remain unaffected. |
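To make the last table entry concrete, here is a minimal C++ sketch of the behavior it describes: samples below a threshold are amplified, samples at or above it pass through unchanged. The power-law curve, the function name `CompressUpward`, and its parameters are assumptions chosen for illustration; a production effect would also smooth gain changes over time with attack and release, which this sketch omits.

```cpp
#include <cmath>

// Applies upward compression to one sample in the range [-1, 1].
// threshold: linear amplitude (0 < threshold <= 1) below which gain is applied.
// ratio:     compression ratio (>= 1); larger values lift quiet sounds more.
// ASSUMPTION: a simple static power-law curve, not any specific Windows effect.
double CompressUpward(double sample, double threshold, double ratio) {
    const double magnitude = std::fabs(sample);
    if (magnitude == 0.0 || magnitude >= threshold) {
        return sample;  // silence and loud samples are left unaffected
    }
    // In decibels this maps a level L below the threshold T to T + (L - T) / ratio.
    const double lifted = threshold * std::pow(magnitude / threshold, 1.0 / ratio);
    return std::copysign(lifted, sample);
}
```

With a threshold of 0.5 and a ratio of 2, a quiet sample of 0.125 is raised to roughly 0.25, while a sample of 0.8 is returned as is.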

The #define statements shown here are available in the KSMedia.h header file.

DEFAULT

RAW

ACOUSTIC ECHO CANCELLATION

NOISE SUPPRESSION

AUTOMATIC GAIN CONTROL

BEAMFORMING

CONSTANT TONE REMOVAL

EQUALIZER

LOUDNESS EQUALIZER

BASS BOOST

VIRTUAL SURROUND

VIRTUAL HEADPHONES

ROOM CORRECTION

BASS MANAGEMENT

ENVIRONMENTAL EFFECTS

SPEAKER PROTECTION

SPEAKER COMPENSATION

DYNAMIC RANGE COMPRESSION

In Windows 10 you can write a universal audio driver that works across many types of hardware. This topic discusses the benefits of this approach as well as the differences between platforms. In addition to the Universal Windows drivers for audio, Windows continues to support previous audio driver technologies, such as WDM.

Getting Started with Universal Windows drivers for Audio

IHVs can develop a Universal Windows driver that works on all devices (desktops, laptops, tablets, phones). This can reduce development time and cost for initial development and later code maintenance.

These tools are available to develop Universal Windows driver support:

Visual Studio 2015 Support: There is a driver setting to set “Target Platform” equal to “Universal”. For more information about setting up the driver development environment, see Getting Started with Universal Windows Drivers.

APIValidator Tool: You can use the ApiValidator.exe tool to verify that the APIs that your driver calls are valid for a Universal Windows driver. This tool is part of the Windows Driver Kit (WDK) for Windows 10, and runs automatically if you are using Visual Studio 2015. For more information, see Validating Universal Windows Drivers.

Updated DDI reference documentation: The DDI reference documentation is being updated to indicate which DDIs are supported by Universal Windows drivers. For more information, see Audio Devices Reference.

Create a Universal Audio Driver

For step-by-step guidance, see Getting Started with Universal Windows Drivers. Here is a summary of the steps:

Load the universal audio sysvad sample to use as a starting point for your universal audio driver. Alternatively, start with the empty WDM driver template and add in code from the universal sysvad sample as needed for your audio driver.

In the project properties, set Target Platform to 'Universal'.

Create an installation package: If your target is a device running Windows 10 for desktop editions (Home, Pro, Enterprise, and Education), use a configurable INF file. If your target is a device running Windows 10 Mobile, use PkgGen to generate an .spkg file.

Build, install, deploy, and debug the driver for Windows 10 for desktop editions or Windows 10 Mobile.

Sample Code

Sysvad and SwapAPO have been converted to be Universal Windows driver samples. For more information, see Sample Audio Drivers.

Available Programming Interfaces for Universal Windows drivers for Audio

Starting with Windows 10, the driver programming interfaces are part of OneCoreUAP-based editions of Windows. By using that common set, you can write a Universal Windows driver. Those drivers will run on Windows 10 for desktop editions, Windows 10 Mobile, and other Windows 10 versions.

The following DDIs are available when working with universal audio drivers.

Convert an Existing Audio Driver to a Universal Windows driver

Follow this process to convert an existing audio driver to a Universal Windows driver.

Determine whether the calls that your existing driver makes will run on OneCoreUAP Windows. Check the requirements section of the reference pages. For more information, see Audio Devices Reference.

Recompile your driver as a Universal Windows driver. In the project properties, set Target Platform to 'Universal'.

Use the ApiValidator.exe tool to verify that the DDIs that your driver calls are valid for a Universal Windows driver. This tool is part of the Windows Driver Kit (WDK) for Windows 10, and runs automatically if you are using Visual Studio 2015. For more information, see Validating Universal Windows Drivers.

If the driver calls interfaces that are not part of OneCoreUAP, the compiler displays errors.

Replace those calls with alternate calls, or create a code workaround, or write a new driver.

Creating a componentized audio driver installation

Overview

To create a smoother and more reliable install experience and to better support component servicing, divide the driver installation process into the following components.

- DSP (if present) and Codec

- APO

- OEM Customizations

Optionally, separate INF files can be used for the DSP and Codec.

This diagram summarizes a componentized audio installation.

A separate extension INF file is used to customize each base driver component for a particular system. Customizations include tuning parameters and other system-specific settings. For more information, see Using an Extension INF File.

An extension INF file must be a universal INF file. For more information, see Using a Universal INF File.

For information about adding software using INF files, see Using a Component INF File.

Submitting componentized INF files

APO INF packages must be submitted to the Partner Center separately from the base driver package. For more information about creating packages, see Windows HLK Getting Started.

SYSVAD componentized INF files

To see an example of componentized INF files, examine the sysvad/TabletAudioSample on GitHub.

| File name | Description |

|---|---|

| ComponentizedAudioSample.inf | The base componentized sample audio INF file. |

| ComponentizedAudioSampleExtension.inf | The extension driver for the sysvad base with additional OEM customizations. |

| ComponentizedApoSample.inf | An APO sample extension INF file. |

The traditional INF files continue to be available in the SYSVAD sample.

| File name | Description |

|---|---|

| tabletaudiosample.inf | A desktop monolithic INF file that contains all of the information needed to install the driver. |

APO vendor specific tuning parameters and feature configuration

All APO vendor system specific settings, parameters, and tuning values must be installed via an extension INF package. In many cases, this can be performed in a simple manner with the INF AddReg directive. In more complex cases, a tuning file can be used.

Base driver packages must not depend on these customizations in order to function (although of course functionality may be reduced).

UWP Audio Settings Apps

To implement an end user UI, use a Hardware Support App (HSA) for a Windows Universal Audio driver. For more information, see Hardware Support App (HSA): Steps for Driver Developers.

Programmatically launching UWP Hardware Support Apps

To programmatically launch a UWP Hardware Support App, based on a driver event (for example, when a new audio device is connected), use the Windows Shell APIs. The Windows 10 Shell APIs support a method for launching UWP UI based on resource activation, or directly via IApplicationActivationManager. You can find more details on automated launching for UWP applications in Automate launching Windows 10 UWP apps.

APO and device driver vendor use of the AudioModules API

The Audio Modules API/DDI is designed to standardize the communication transport (but not the protocol) for commands passed between a UWP application or user-mode service and a kernel driver module or DSP processing block. Audio Modules requires a driver implementing the correct DDI to support module enumeration and communication. The commands are passed as binary data, and their interpretation and definition are left up to the creator.

Audio Modules is not currently designed to facilitate direct communication between a UWP app and a SW APO running in the audio engine.

For more information about audio modules, see Implementing Audio Module Communication and Configure and query audio device modules.

APO HWID strings construction

APO Hardware IDs incorporate both standard information and vendor-defined strings.

They are constructed as follows:

Where:

- v(4) is the 4-character identifier for the APO device vendor. This will be managed by Microsoft.

- a(4) is the 4-character identifier for the APO, defined by the APO vendor.

- n(4) is the 4-character PCI SIG-assigned identifier for the vendor of the subsystem for the parent device. This is typically the OEM identifier.

- s(4) is the 4-character vendor-defined subsystem identifier for the parent device. This is typically the OEM product identifier.

Plug and Play INF version and date evaluation for driver update

The Windows Plug and Play system evaluates the date and the driver version to determine which driver to install when multiple drivers exist. For more information, see How Windows Ranks Drivers.

To allow the latest driver to be used, be sure to update the date and version for each new version of the driver.
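The effect of the date and version on driver selection can be sketched as a simple comparison. The following C++ model assumes that two candidate drivers are otherwise ranked equally and that the more recent date wins, with the version used to break ties on the date; the full ranking rules are described in How Windows Ranks Drivers. The `DriverStamp` struct and `IsPreferred` function are hypothetical names, not part of any Windows API.

```cpp
#include <array>
#include <tuple>

// Hypothetical model of the date and version that a driver package declares.
struct DriverStamp {
    int year;
    int month;
    int day;
    std::array<int, 4> version;  // four-part version, most significant part first
};

// Returns true if `a` would be chosen over `b`.
// ASSUMPTION: both drivers are otherwise equally ranked; only the
// date-then-version comparison is modeled here.
bool IsPreferred(const DriverStamp& a, const DriverStamp& b) {
    return std::tie(a.year, a.month, a.day, a.version) >
           std::tie(b.year, b.month, b.day, b.version);
}
```

In this model, a package whose version was raised but whose date was left older than the installed driver's date would not be preferred, which is why both values should be updated together.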

APO driver registry key

For third party-defined audio driver/APO registry keys, use HKR with the exception of HKLM\System\CurrentControlSet.

Use a Windows Service to facilitate UWP <-> APO communication

A Windows Service is not strictly required for management of user-mode components like APOs. However, if your design includes an RPC server to facilitate UWP <-> APO communication, we recommend implementing that functionality in a Windows Service that then controls the APO running in the audio engine.

Building the Sysvad Universal Audio Sample for Windows 10 Desktop

Complete the following steps to build the sysvad sample for Windows 10 desktop.

Locate the desktop INF file (tabletaudiosample.inf) and set the manufacturer name to a value such as 'Contoso'.

In Solution Explorer, select and hold (or right-click) Solution 'sysvad', and choose Configuration Manager. If you are deploying to a 64-bit version of Windows, set the target platform to x64. Make sure that the configuration and platform settings are the same for all of the projects.

Build all of the projects in the sysvad solution.

Locate the output directory for the build. For example, it could be located in a directory like this:

Navigate to the Tools folder in your WDK installation and locate the PnpUtil tool. For example, look in the following folder: C:\Program Files (x86)\Windows Kits\10\Tools\x64\PnpUtil.exe.

Copy the following files to the system on which you want to install the sysvad driver:

| File | Description |

|---|---|

| TabletAudioSample.sys | The driver file. |

| tabletaudiosample.inf | An information (INF) file that contains information needed to install the driver. |

| sysvad.cat | The catalog file. |

| SwapAPO.dll | A sample driver extension for a UI to manage APOs. |

| PropPageExt.dll | A sample driver extension for a property page. |

| KeywordDetectorAdapter.dll | A sample keyword detector. |

Install and test the driver

Follow these steps to install the driver using PnpUtil on the target system.

Open an Administrator command prompt and type the following in the directory that you copied the driver files to.

pnputil -i -a tabletaudiosample.inf

The sysvad driver install should complete. If there are any errors you can examine this file for additional information:

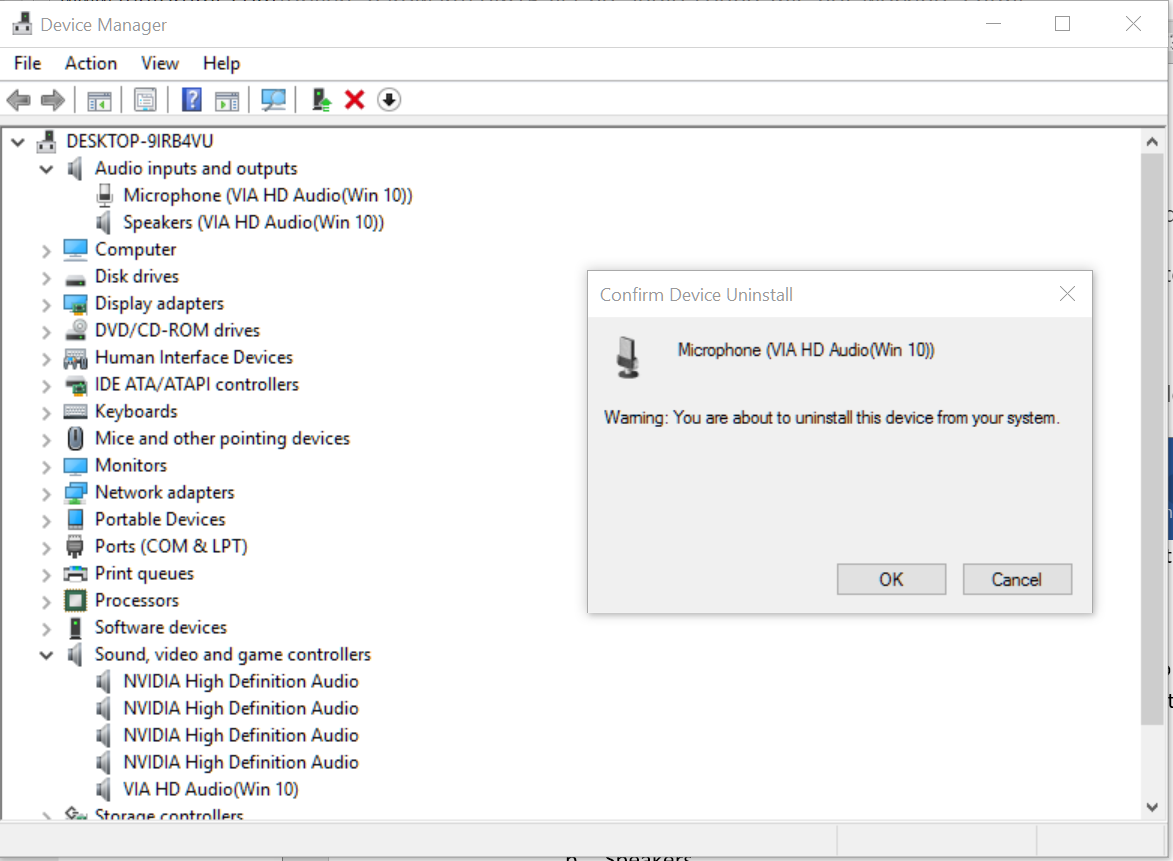

%windir%infsetupapi.dev.logIn Device Manager, on the View menu, choose Devices by type. In the device tree, locate Microsoft Virtual Audio Device (WDM) - Sysvad Sample. This is typically under the Sound, video and game controllers node.

On the target computer, open Control Panel and navigate to Hardware and Sound > Manage audio devices. In the Sound dialog box, select the speaker icon labeled as Microsoft Virtual Audio Device (WDM) - Sysvad Sample, then select Set Default, but do not select OK. This will keep the Sound dialog box open.

Locate an MP3 or other audio file on the target computer and double-click to play it. Then in the Sound dialog box, verify that there is activity in the volume level indicator associated with the Microsoft Virtual Audio Device (WDM) - Sysvad Sample driver.